Stephanie Dinkins: Building Something Now

Stephanie Dinkins, #SayItAloud, WebXR experience, 5 minutes 50 seconds plus user interaction time [courtesy of the artist and Queens Museum]


In a recent conversation, a colleague made the claim that the math supporting the technology under discussion was not cultural. For me, this seemed patently false. The idea that technology and its underpinnings are excused from cultural criticism is connected to the idea of technology as being somehow neutral—a kind of offspring of objectivity in the sense that the claim rests on the separation of knowing from the messiness of human error, bias, embodiment, and, perhaps most important and most opaque, our historically situated presumptions. This notion is precisely why artistic practice and other forms of knowledge production should be aligned. Data influences everything we do, all of the time, but revealing it as an apparatus can begin to unmask its attendant ideologies.

I met Stephanie Dinkins in 2018, during a symposium organized by the NetGain Partnership, at the Museum of Contemporary Art Chicago. What I found in my first encounter with her has held true over the many hours of conversation we’ve since shared. Dinkins asks the fundamental questions that are forgotten when you’re too far down the technology rabbit hole. At the precise moment when everyone is completely down with the tech speak, she asks the one question so obvious that everyone else has forgotten it, a question that reopens the possibility of the present moment: Why are we doing it this way? Even if it makes very little difference in the grand scheme of things—even if that’s true—could we just do something different in this moment?

This conversation flowed organically from technology to family and community … because that is what is at stake.

Joey Orr: You’re an artist who uses technology in your work. [Also] you’re really an artist who’s thinking about community, about who isn’t being served by technologies, and about how we might expand who gets to participate and express agency in how emergent technologies get formulated. I wonder if you would think with me a little bit about what kind of artist you are. What are the values underneath what you’re doing? Why is it being applied to technology specifically?

Stephanie Dinkins: That’s an interesting question, Joey, because I would never say I’m a technology artist. It’s just the place people have found to put me that makes sense to them. But for me, it doesn’t matter. Although, I do think that technology matters a lot on the social side of things, the community side of things. I feel like I’m an artist who’s always thought about community. I’m an artist because I need to find a place to exist in a way that feels supportive, that understands who I am and who my communities are. How can Black people be recognized as full human beings without having to explain ourselves all the time and without having things come at us, sometimes [things] that we can’t even see, that are trying to hold us to definitions that other people have made? I’m an artist who will use any method I can to trigger small hesitations in others before status quo perceptions are applied. It’s about what I can do to get others on board, even a tiny bit, so that we can have a conversation.

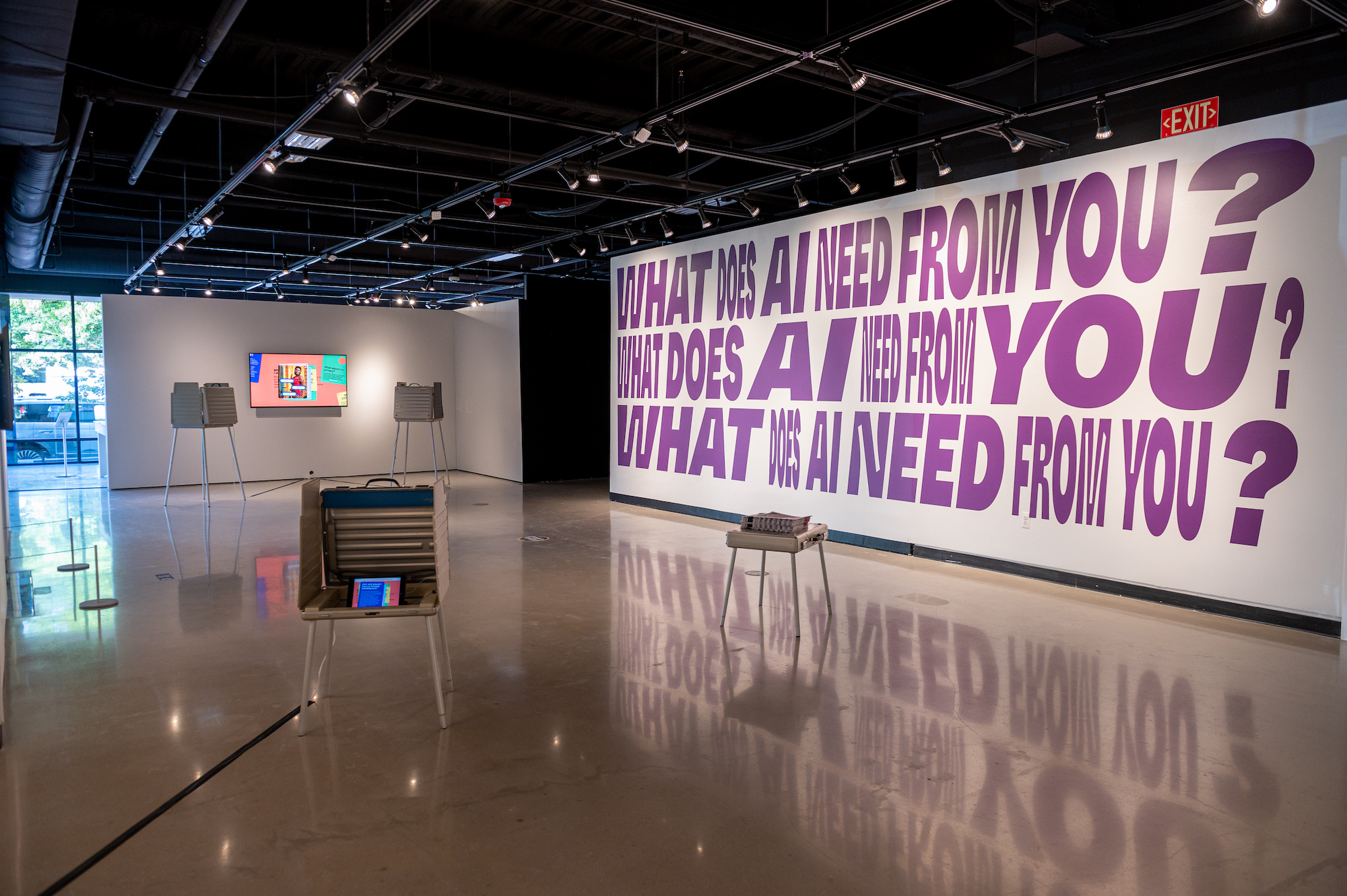

JO: We’ve begun to think about technology in terms of user experience, and so everything’s supposed to be so facile. It’s not supposed to take any work to accomplish the things that we want to jump on and accomplish. I saw your exhibition On Love and Data originally at the Stamps Gallery, before it went to the Queens Museum. I had heard you talk, and I’d been in conversations with you a lot, but I had never actually been in a space with your work before. When I left, I thought it felt so DIY. It felt like, instead of using the technologies for what they were designed to do, you were saying, wait a minute, this is a tool. How is it not serving us? How might it serve us? And let’s play with it at that level where we’re not internalizing all the assumptions the technology rests on. Instead, we’re starting as humans and figuring out how it might extend our humanity.

Stephanie Dinkins, On Love and Data, installation view, 2021 [photo: Eric Bronson; courtesy of the artist and Stamps Gallery, Ann Arbor]

SD: We’re given this stuff, and it’s always beautiful. It’s seductive. It often pulls us into activities we might not want to be involved in, especially when the tech is slick. It has this kind of zhuzh to it, which removes us from its underlying aims. We get more and more removed, and we feel like we have no agency within the tech. The question for me is about the agency—although I will tell you that the DIY is my grandmother’s aesthetic. I grew up with someone who did a lot of DIY work in the house and would give tours and point out the crazy stuff they made. For me, that is art, when you can see people’s hands and minds at work. That’s where the beauty lies. Whenever I see my work in big shows, it looks so wonky next to the other art. In a way, I love that. My work tends to disrupt the fluidity of an exhibition. Because it’s there with all the other art, I think it can change how people think about all the other works. But it’s always such an odd experience for me, because it always does just look like, Oh, does this really belong here? But yeah, it’s here, and deal with it. [laughs]

JO: [laughs] It’s interesting to hear you talk about your grandma. Let’s talk about family for a minute in this piece, Not The Only One (N’TOO) (2018). Can you just tell the story of that work?

Stephanie Dinkins, Not the Only One, 2022–2023, deep learning AI, computer, Arduino, sensors, electronics, 16 x 18 inches [photo: Paula Virta; courtesy of the artist]

SD: I can try. Not The Only One is not anything I ever thought I would attempt to make. But because I was talking to Bina48, the robot by the Terasem Foundation, and thinking about what seemed to be its good points, its bad points, and what’s missing, people kept asking me when I was going to make a chatbot. I started to think, if I were going to make something like that, what would it be? What’s going to hold my attention? What do I want to know? I wanted to know more about my family and the stories they won’t tell. Is there a way for me to get to the bottom of some of these stories? We could do some oral histories and interviews. Now, if I start to take that information and combine it with code, that becomes an interesting proposition for me. I started combing GitHub to see if there were repositories that would help me get started. It didn’t take much time to find code to run my data through and get results. I started running some Toni Morrison as data to see how the algorithm would react. I was using basic chatbot code. Then the research became about how to format my informal interviews into some kind of viable data. You need a lot of data. We had 40–50 hours of interviews; that’s really not enough. And then there was the question of what larger dataset could be the foundation for the piece. Large datasets usually provide language basics and knowledge context, and then you can train on more personal or specific information. People would suggest I use things like the Cornell movie dataset, which is a large repository of movie dialogue, or, worse, Wikipedia. There are many prefab datasets out there that researchers and others use to build projects and get their inquiries started.

JO: I see.

SD: When I did research around different datasets that I could use, I always came to the conclusion that I’m working with my family’s information, and I don’t want that built on top of something like a US movie dataset. Movies in the United States are very biased and tainted. I don’t like what they reflect back to me, so that’s a hard ask. It’s like, no, that’s not it. Available supporting data hardly ever feels neutral enough to support the history of my Black family. I wanted to make a chatbot, but I didn’t have enough information or acceptable base data to do it well. My next thought was, maybe we need to find a way to work with the limited information, or small data, I had. That’s when I started thinking about small data, or just not using enough data in the project. It’s why Not The Only One is wonky. Every once in a while, N’TOO will say something stunning, but it’s not built on enough data to have fluid or deeper conversations. At first, I thought this was problematic. As I kept putting that piece into public, and it kept not meeting people’s expectations, I started to really enjoy its deficiencies. Now we have a chatbot out there, built on Blackness, that often provides answers that sound ridiculous but that have a logic. It gives non sequitur answers. It refuses to answer. That’s a lot for people to handle. It’s gotten kicked out of an exhibition for not meeting conversational expectations. Still, what’s been happening is people either show N’TOO a lot of grace and they try to work with it, or they’re just mad because it’s not performing the way they expect. How do we allow our technologies to have the space to grow into something without the expectation that they’re going to be perfect things created to serve? We’re very quickly developing technology that’s not really adequate to support us as humans in the long run. What does it take to get us to understand that relationship and how it might function?

JO: When Not The Only One doesn’t meet our expectations, it’s pointing to the lack of reliable data that would be necessary to make it function that way in the first instance. It’s one of the things the work is about.

SD: I think that’s true. I think N’TOO’s “brokenness” points to the fact that I refuse to use the unaligned, unsupportive data as a foundation for the piece. I refuse to do that just to get something that works for you. That causes problems, but I also think it’s beautiful. The development of Not The Only One is done in public. It’s actually kind of using an ethos that lots of tech companies use. Put a product out, let it do its thing, let it fail, fix it. Put it out, let it do its thing, fix it. Right? But the point is, I won’t fix it all the way. There are ways I could have “fixed it,” but then the project would lose interest and viability for me. It wouldn’t question the culture.

Stephanie Dinkins, Not The Only One, 2018, deep learning AI, computer, Arduino, sensors, electronics, 18 x 18 inches [courtesy of the artist]

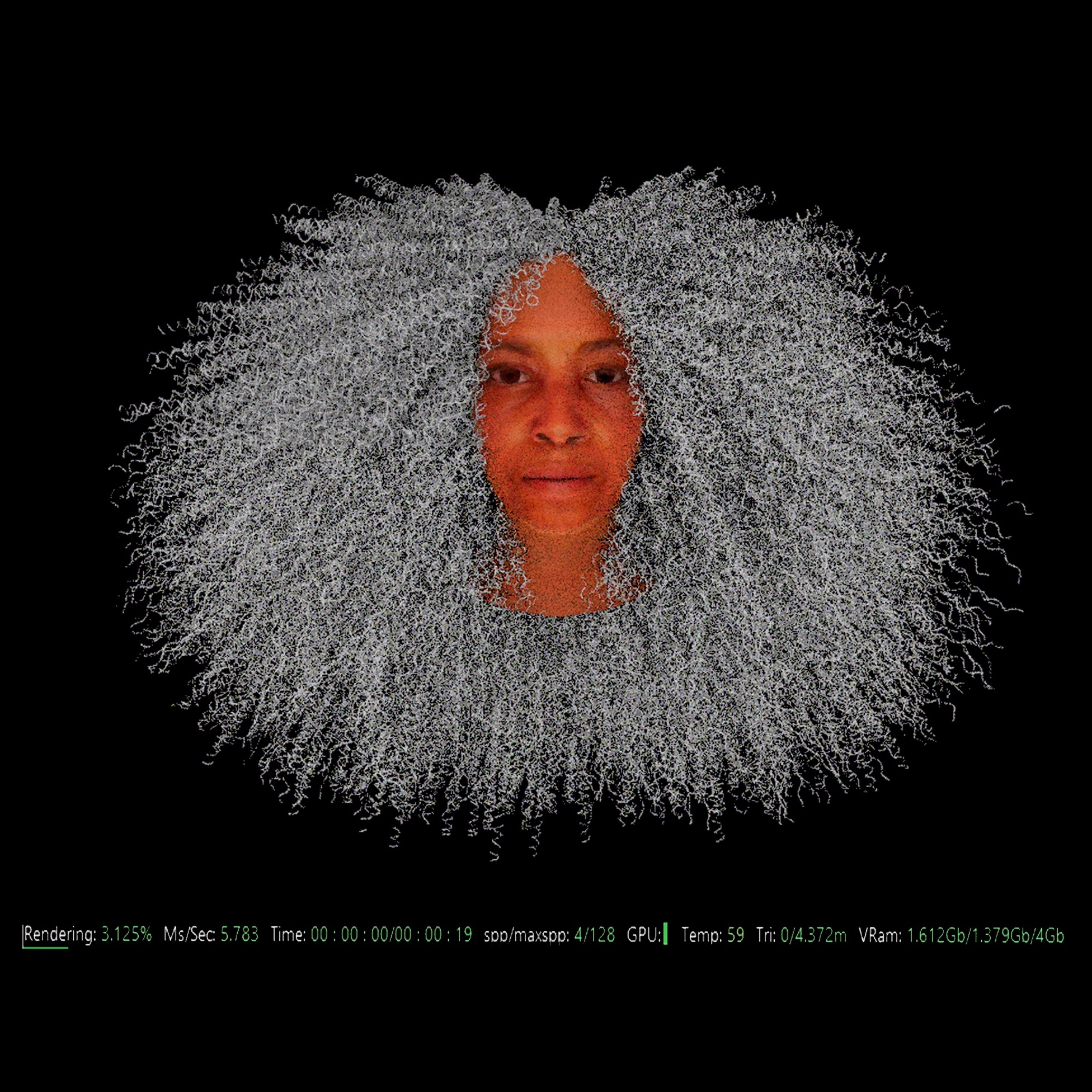

Stephanie Dinkins, Not the Only One Avatar, composite construction [courtesy of the artist]

JO: By “fix it,” you mean make it meet public expectations, which is not the point of the work.

SD: Exactly.

JO: One of the reasons I was thinking about Not The Only One when I originally asked you was because we were talking about community, and you mentioned your grandmother. Could you say something about the members of your family who were really feeding in the information?

SD: N’TOO is based on three generations of women in my family: my aunt, who is my mother’s sister—my mother died a long time ago; myself; and my niece, who is also my goddaughter. We’re each about 30 years apart in age, so I thought it would be interesting for us to have back-and-forth conversations. And it was. My aunt would say things to my niece that she wouldn’t say to me. So, our experiences leapfrogged in certain ways. Plus, it’s a nice composite of experience, some of which overlaps, some of which doesn’t, some of which sees the pride in what’s going on, and some of which is still on the struggle. There’s something nice about that swirl of community in terms of the information base. And, really, we just enjoyed the conversation. The process of making N’TOO was as good for the family and our small community as its development as a project in the world has been. I should add that it’s interesting for Not The Only One to be in the world like that, another reason for its brokenness. There’s some consequential stuff in the data. I now have the burden of caring for my family’s private information. In a way, the project’s brokenness is good. Otherwise, it would reveal private histories.

JO: I hadn’t thought about that piece in terms of how families or even particular communities code, just in regular speak, and how writing code might be leveraged for protection in the same way.

SD: I think that goes very deep in terms of family and community. One of my favorite things that it’s ever said is “take it to the would-be.” For me, that statement is akin to my grandmother’s coded way of telling us how to view, interact with, and think about the world. Nana would make odd, hard-to-decipher proclamations that we’d have to ponder to understand what the heck we’d been told. This piece is great for me. I don’t know how the world sees N’TOO, but in an intimate setting, it’s a fascinating thing to play in: that idea of coding and information, or even the things that I was first interested in, like the stories that don’t get told, and then that kind of obfuscation, that kind of hiding of things, endured in the name of survival. It is all in there.

JO: I want to push a little bit further on the original question about how we understand your artistic practice. It seems to me that you make community-generated work. I’m thinking about Not The Only One. Secret Garden. AI.Assembly. I wonder if community-generated work sounds right. And if so, how has that played out in one or two of your projects that people might be familiar with?

Stephanie Dinkins, Secret Garden, 2021, immersive web experience [courtesy of the artist]

SD: I would maybe say community-concerned work. And then if the community is involved in the generation of the work, that’s even better. For me, it starts with a concern with the way things fit and the way people can be whole. AI.Assembly is really interesting, because we don’t have hard goals. We get together. I’m pretty adamant about not having goals or outcomes burden the process. The idea is to simply get together, think together for a little bit, play together, and then, hopefully, relationships become deep enough that people work with and support each other outside of the event. AI.Assembly is community concerned and hopefully generative. I understand AI.Assembly to be successful when participants don’t want to leave each other. Enough bonding takes place that people feel nourished, attached, and supported. It’s the bonding and walking with that that I find important. Secret Garden is a really different experience. It was a community-generated production. It went through a roller coaster ride of changes. But in the end, my original ideas survived the process. It became a work I’m really proud of, because I felt the project emerged from a battle [over] what is important and to whom. That’s an interesting kind of community generation, because all of us working together in a sometimes convivial, sometimes adversarial way impact each other. Sometimes you agree, sometimes you don’t, but you’re all working towards this one aim. We’re thinking about it; we’re talking about things. I don’t see how the process doesn’t impact all involved in some small way. These are very different kinds of community generation, but each is important.

JO: I’ve heard you say you’re not a gallery artist. And listening to you talk about this sort of community-concerned work, there’s even a level of authorial control that you’re not invested in.

SD: Yes.

JO: But when I walk into On Love and Data, it’s obvious that you’re behind it. You’re not a gallery artist; you’re not supporting yourself on the sale of your work. You’re an educator. How do you describe yourself? Not an auteur, but what instead?

SD: [laughs] There are maybe two answers to that. I’m a person who wants to be able to be creative and go on these adventures, sometimes with other folks, sometimes not, and have some kinds of outcomes that other people encounter and have to deal with. That’s just being human and finding a way to produce a quality of life that is sustainable. Simple, right? But then there’s the bigger question of being an artist in the world. The heavier thing. My practice as an artist allows me to be involved in a grant that allows me to have a lab that allows me to support people and help other people realize their goals, which I recently discovered I find really gratifying. So, I want enough name recognition that people understand who [I am] and what I do, and that I’m an entity to be considered. I don’t want so much name recognition that people fawn over me. We’re just people in a room. We’re all thinking. Some of us get to put our ideas on a wall in certain institutions. That’s all there is. I’m interested in making space for other folks who want to be able to do something similar without getting stuck in a particular creative rut. Although I do feel a little bit stuck right now. My goal is to keep positioning the work as research, having enough outcomes so that they can be put in the world and sustain just enough momentum to continue doing the work I am privileged to get to do, work that feels dutiful, and work I truly desire to do.

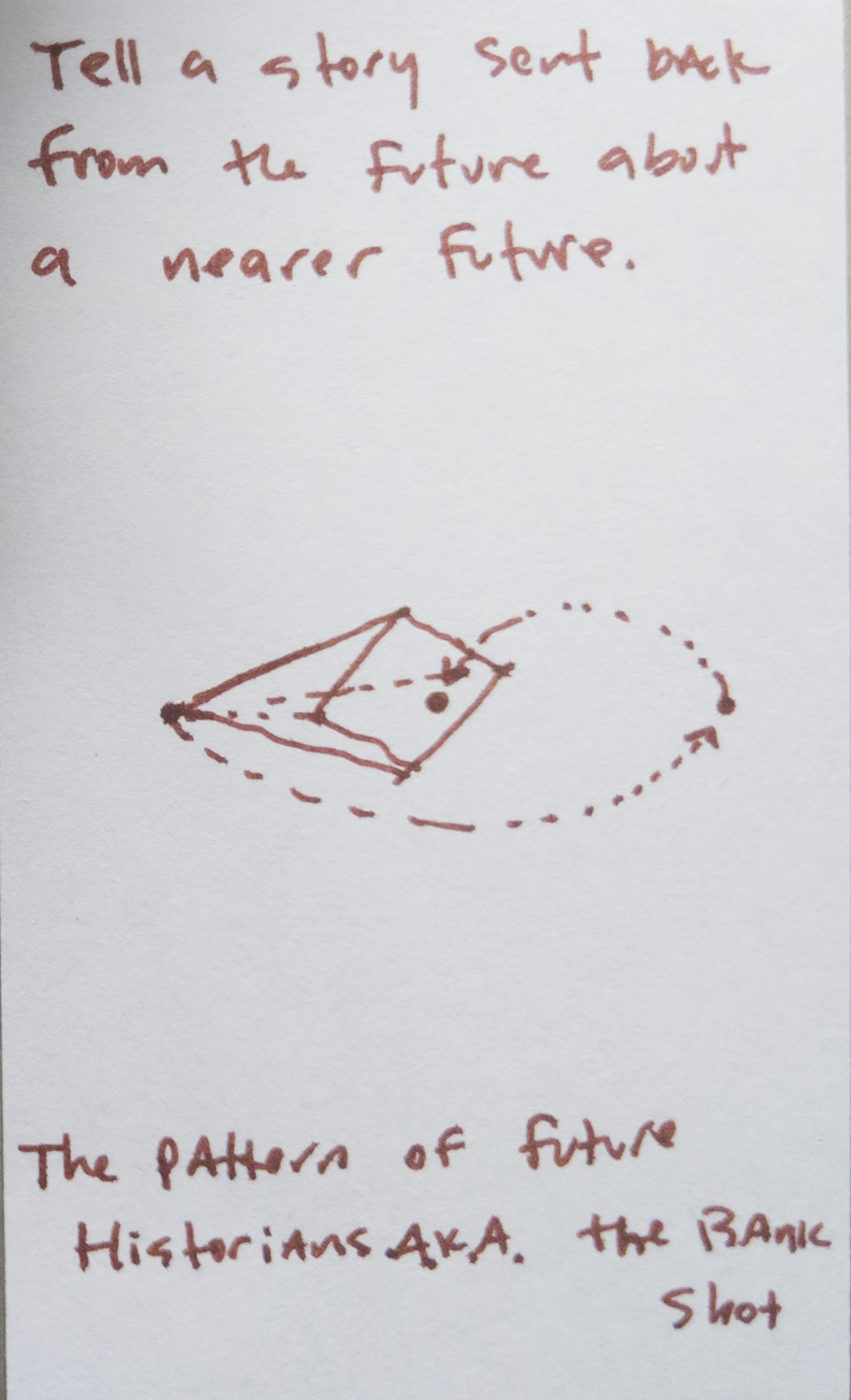

Stephanie Dinkins, anonymous note from AI.Assembly workshop, provocation: Story Bankshot, 2019 [courtesy of the artist]

JO: I was rereading your “Afro-now-ism” manifesto. Manifestos are of [their] moment. They have a punch. They mean to speak to the urgency of the moment in which they’re created. It was published in 2020.

SD: Yeah. So, what was 2020? All that turmoil. Ironically, Rodney King always comes to mind when I am trying to recall George Floyd’s name. King was really pivotal for me. I left my job after the Rodney King uprising. The cycles of brutality—[from] protest [back] to repressive status quo—are dizzying. And you see Black people being continuously pointed toward an “out there” future. More than 30 years later, we’re still fighting for elusive equality that’s in a speculated future. But what about now? What tells us we can’t find joy, self-defined success, and an intrinsic sense of equality in the here and now? What would it take to enact those ideas? I think many people consciously or subconsciously adhere to capital-tied goals and/or detrimental ideas that uphold a social order that is not supportive of people of color.

Can we even imagine goals separate from the sick society we live in? For me, it’s about trying to stop being driven by goals and methods that were never meant to be accessible to the majority, let alone minorities. Surely, we can devise practices that are more supportive for us individually, and as a community. Black folks in America have done this for eons. I feel like a lot of Black artists get seduced into making work that harkens back to our past and all the harms. How do we start to acknowledge and understand the history, but then make whatever it is we want to make [that’s] not based on stuckness or nostalgia for this past? I know there’s a history, but why does much of our work have to be based on that history or speculative futures to be recognized by the establishment? Why is that the attention economy? What attentions would we create for ourselves outside of the greater art ecosystem?

JO: Those are big ones.

SD: Yeah.

Stephanie Dinkins, Conversations with Bina48 (still), 2014–ongoing, digital video installation [courtesy of the artist]

JO: You’re talking about these repeating cycles. I grew up in Atlanta in the 1970s and ’80s, during the time of Atlanta’s missing and murdered Black children. As a kid, I grew up with this phrase repeated in the media—it’s 9 o’clock, or whatever, do you know where your children are? One of my best friends and I—we were maybe seven, and he was a Black kid—were walking through these woods together, and a White man came at us. I froze, but my friend shoved me, and we were able to run away. I’ve thought a lot about the fact that how he was trained to protect himself may have saved my life.

SD: I think about this a lot. What does it mean to be trained, and what’s the legacy of that training? In response to your story, what does it mean to be trained to be safe? When a Black parent urgently demands an adolescent stand down in public, perhaps it’s because they fear a life is on the line. In some situations, the thing you most want to do is clap back, because you know a situation is unfair, you know it’s wrong, but the best chance of survival is to hold your tongue. I think this depends on where people are from and what they’ve learned themselves, but seeing such an interaction on the street, you might think, why is this parent being so mean to this kid? You also have to think about what love looks like, and what the history that informed the situation looks like.

I also wonder how you train people under siege to have agency, know their self-worth, and participate in a society without stifling their ability to participate fully, without dimming their shine, because they and their people have experience-based fear that an encounter with the wrong authority might get them killed. Still, I want Black people to feel free enough in this country to do things like drive across the country—without fear. I often encourage people to take the drive; it’s a beautiful thing to do. I’ve done it multiple times. I find people are, for the most part, good, or at least okay. There are, of course, spaces that might hold danger. We understand this, but to let that stop you from enjoying what is yours is a painful thought for me. It makes the world so small. So, so small.

I’ve lived in Bed-Stuy, Brooklyn, for about 20 years, but I grew up in Tottenville, Staten Island, which is only about 20 miles away. Even though we were one of a few Black families in a mostly White community, I knew I could call on the police. When I moved to Bed-Stuy, I had to learn to not call the cops—not to do the thing that, at times, seems most expedient. I needed to retrain myself to use the underlying informal neighborhood system of care, because I’m more concerned with a Black man getting needlessly shot than with property stolen from my car, for example. Going to the mayor or governor of the block is a system that arose because of other kinds of training, of the people who live in that area and [of] the police. I wonder if oppressed people’s worlds can be enlarged and lived in without all the fear underneath, or at least with a logical sense of what’s possible in their world, but also knowing what to do if something goes down. Yes, I think a lot about what it means to live a free life. Is it possible, or not, and what do we do to actually get there?

JO: We started off with you saying you don’t think of yourself as a tech artist. And you’re not actually talking about technology right now, but everything that you’re saying is so descriptive of the actual work that you do as an artist when you are engaging with emergent technologies.

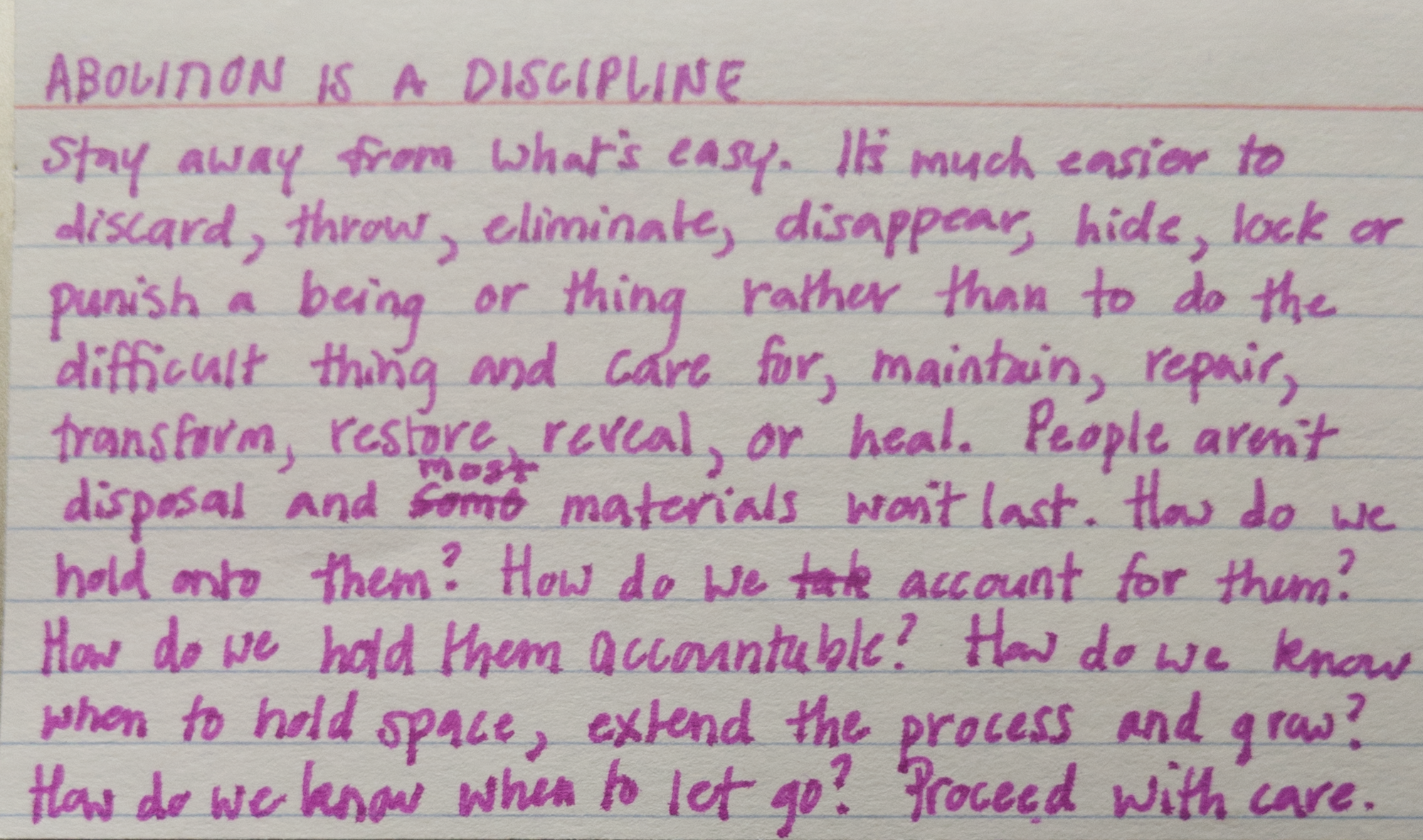

Stephanie Dinkins, anonymous note from AI.Assembly workshop, provocation: ABOLITION, 2019 [courtesy of the artist]

SD: Yeah, well, Joey, the thing I always come back to is this: The role of artist is the best way I’ve found to explore my concerns about America and present those ideas in ways that make sense to me. At the core, I am interested in perception, belonging, and the ability of Black people to be whole in this society. Until we get a handle on our worst impulses, even with the best technologies in the world, we’re going to produce similar outcomes. Technology ingrains itself into so many systems we rely on, in ways that we can’t even see. That impacts the potential for freedom for Black folks—everyone really. Yet, we’re dealing with the same racist crap we’ve always been dealing with, or refuse to deal with, actually. So, how do we get closer to truly unraveling our original problems so that any tech we build on top of our systems might have a better chance of supporting the majority, which is to say people of color?

JO: You mentioned, earlier in the interview, that you were feeling a little bit stuck. Would you mind saying something about where you are with that, and what you mean by that?

SD: Yeah, I’m feeling a bit stuck because I’ve grown to hate computers. Everything I do these days is on a computer, and, you know, the year of Zoom teaching. I’m not a coder. I’m not somebody who’s naturally taken to this form of expression, so everything takes me forever. I’m just sick of being tethered to this thing. So, how do I start to disentangle myself so that I can do some other things and only touch a computer when necessary? As I sit here talking to you on my computer. [laughs]

JO: I have a kind of a parallel experience, working in institutions. I always describe myself, in choosing to work within museums, as an ambivalent steward. Whenever I wonder why I’m still working in museums, the next thought is, who takes my place? I want people within our institutions who are not there simply to perpetuate them but, instead, to hold them accountable and dream about what they might become. If you lose all of those people, and you replace them with people that just trust everything all the way down, then our whole society loses a really important level of critical engagement.

SD: This is a really big question for me, because every time I try to say I’m done, I wonder, what if I’m not doing the work or doing these explorations? People need to be in places where they’re unexpected and questioning the expected. It’s one reason I will take residencies in impactful institutions. There’s a toll to that, though. I’m willing to go in, but man! I’m comfortable being a fish out of water, so I can do that. But at the same time, working from the inside out is alienating. Clearly, I think these assignments are important enough for me to take on, but building a bit more rest into my workflow would be good. However, I have access that allows me to get some things done at the moment. To squander that access would be missing opportunities for tiny change. So, let’s see what we can build. Or let’s see what we can give some other folks to build.

Joey Orr is the Andrew W. Mellon Curator for Research at the Spencer Museum of Art at the University of Kansas, where he directs the Integrated Arts Research Initiative and is affiliate faculty in Museum Studies and Visual Art. He previously served as the Andrew W. Mellon Postdoctoral Curatorial Fellow at the Museum of Contemporary Art Chicago, where his major project aligned three exhibitions around artistic research. Recent writing has been published in ART PAPERS, Art Journal Open, BOMB, Hyperallergic, Journal for Artistic Research (Network Reflections), and Sculpture. Juried writing has been published by Antennae: Journal of Nature and Visual Culture, Art & the Public Sphere, Capacious: Journal for Emerging Affect Inquiry, Images: Journal of Jewish Art and Visual Culture, Journal of American Studies, PARSE, QED: A Journal in GLBTQ Worldmaking, and Visual Methodologies, along with a chapter in the volume Rhetoric, Social Value, and the Arts (2017). He holds an MA from the School of the Art Institute of Chicago and a PhD from Emory University. An Atlanta native, Orr founded the public art program ShedSpace (2000–2004), worked with the Museum of Contemporary Art of Georgia (MOCA GA) during its early years, and is a founding member of the idea collective John Q.